Implementing an image transcoding solution yourself

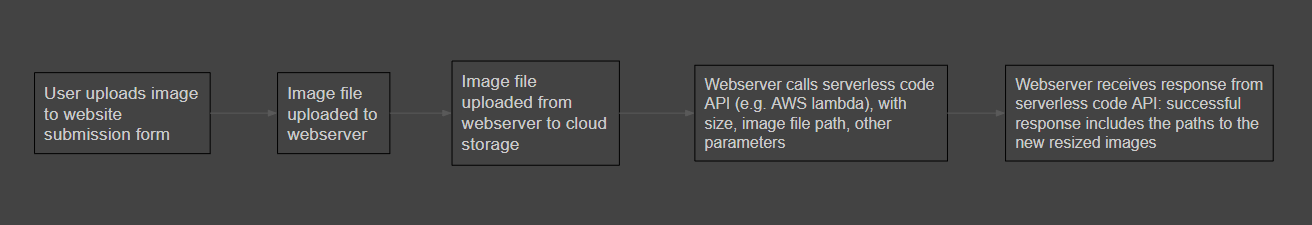

If you are going to implement your own image transcoding solution, the first thing to understand is the basic infrastructure and execution flow.

Infrastructure

As a reminder of the basic execution flow that was shown earlier:

Your website will upload to cloud storage whether or not you build your own image transcoding solution, so the only parts you need to add here are the cloud transcoding function itself and the code that uses your serverless code provider's API to invoke that function.

Invoking serverless code functions

For most providers, there is some API method of doing this. Here I will cover AWS Lambda since I'm familiar with it, but some of the principles likely apply to other providers as well.

Your webserver needs to be able to authenticate itself to AWS Lambda, which it usually does when initialising the API client it will use. In PHP, initialising the Lambda client looks like this:

$lambdaAdminClient = new Aws\Lambda\LambdaClient([

'credentials' => [

'key' => '<the Access Key for the IAM user you want to use to invoke the function>',

'secret' => '<the Secret Key for the IAM user you want to use to invoke the function>',

],

'region' => '<the region of your function, e.g. eu-west-3>',

'version' => '<the API version to use for AWS Lambda - these are dates specified in the documentation, e.g. 2015-03-31>',

]);

It's best to create an IAM user whose only job is running Lambda functions, rather than e.g. using some 'superuser' IAM user with a huge number of permissions. Firstly, it's security best practice not to grant unnecessary permissions; secondly, the user gets an error if you unintentionally make it do something it isn't supposed to do.

Do not store these credentials on the webserver itself! This shouldn't need to be said, but I'm saying it anyway. For AWS, Parameter Store within the Systems Manager service is a good option for securely storing credentials; you could also store them in your database server.

Example Permissions Policy for the IAM User

Here is an example policy - see the notes for important details to pay attention to.

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "VisualEditor0",

"Effect": "Allow",

"Action": [

"lambda:CreateFunction",

"lambda:UpdateFunctionCode",

"iam:PassRole",

"lambda:InvokeFunction",

"lambda:GetLayerVersion",

"lambda:UpdateFunctionConfiguration",

"lambda:DeleteFunction"

],

"Resource": [

"arn:aws:iam::<your AWS account number>:role/<the name of the Lambda execution role that the function is executed with>",

"arn:aws:lambda:<the AWS region of your Lambda function>:<your AWS account number>:function:<the name/s of the function this user is allowed to execute>",

"arn:aws:lambda:<the AWS region of your Lambda function>:<your AWS account number>:layer:*:*"

]

}

]

}

Notes

- The function name you specify in the Resource array can include wildcards. I would suggest naming functions with some prefix, e.g. "Your-Website-FunctionName", so that you can put "Your-Website-*" in this field, letting your user run only functions that have that prefix and not any others.

- The Lambda execution role is not the same as this IAM user - it is its own Role entity in the IAM console. You need to give this role its own set of permissions to do its job, which is covered later.

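- The iam:PassRole permission is necessary here because of lambda:CreateFunction and lambda:UpdateFunctionConfiguration: when your IAM user creates a function or changes its configuration, it has to be allowed to "pass" the Lambda execution role you specified in the Resource array to Lambda, which then runs the function with that role. Invoking an existing function should only require lambda:InvokeFunction, so a user that only ever invokes functions can likely do without iam:PassRole.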

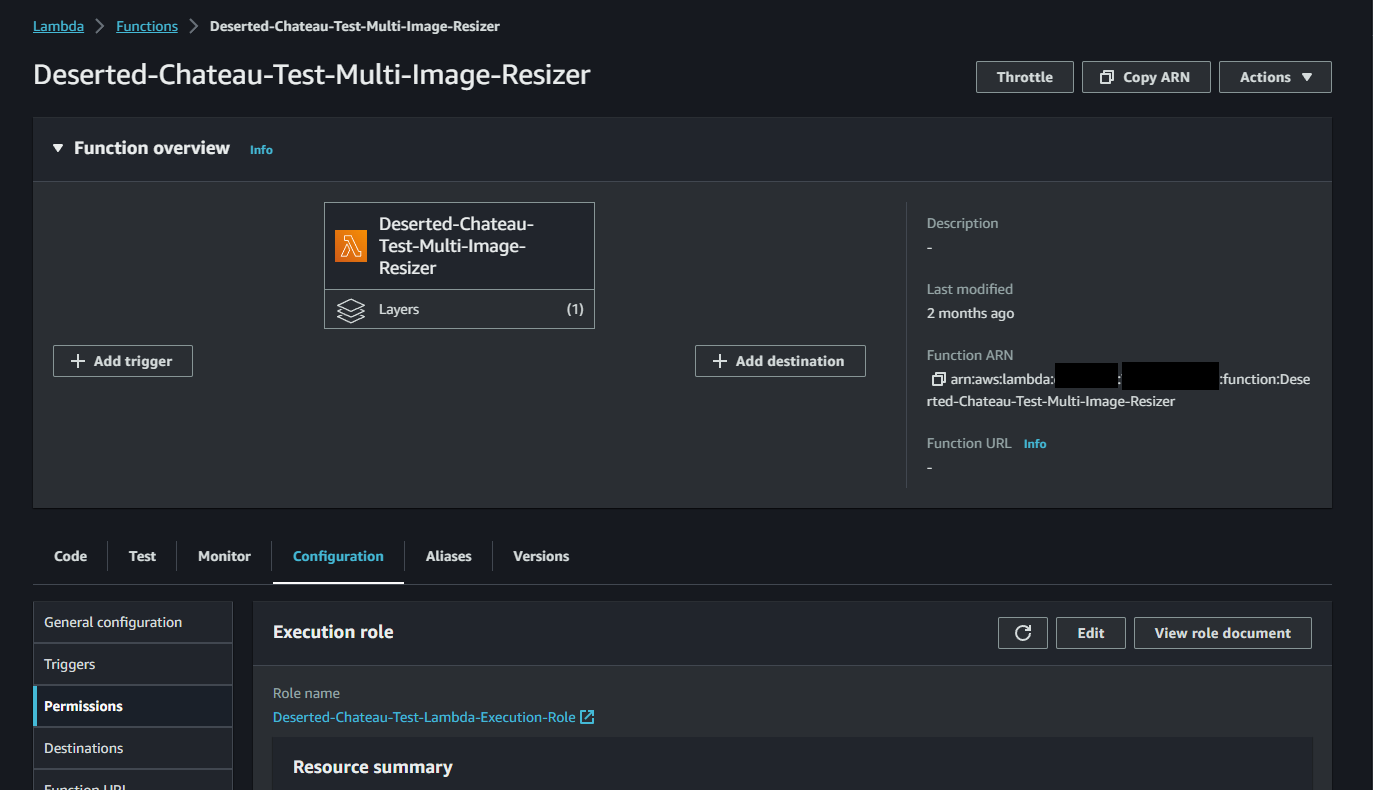

The Lambda "execution role"

Whenever your Lambda function is run, it runs with the permissions of a specific role, the execution role specified for that function. Here's an example from Deserted Chateau's test environment.

This role is entirely separate from the IAM user your webserver uses to request the Lambda function be run. You can modify the role the function uses either after creating it, or when creating it. You will need to modify the permissions of the role you use in the IAM console, under Access Management -> Roles.

Generally speaking, your Lambda execution role is going to need more than one permissions policy (it's messy to try and put all permissions you need in one policy).

Example Basic Permissions Policy for the Lambda Execution Role

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "VisualEditor0",

"Effect": "Allow",

"Action": [

"ec2:CreateNetworkInterface",

"ec2:DescribeNetworkInterfaces",

"ec2:DeleteNetworkInterface"

],

"Resource": "*"

},

{

"Sid": "VisualEditor1",

"Effect": "Allow",

"Action": "logs:PutLogEvents",

"Resource": "arn:aws:logs:*:<your AWS account number>:log-group:*:log-stream:*"

},

{

"Sid": "VisualEditor2",

"Effect": "Allow",

"Action": [

"logs:CreateLogStream",

"logs:CreateLogGroup"

],

"Resource": "arn:aws:logs:*:<your AWS account number>:log-group:*"

}

]

}

Notes

- You only need the EC2 network interface permissions if the functions using this execution role will run within a VPC. Without those permissions, functions that need to run in a VPC won't work.

Example Additional Permissions Policy for the Lambda Execution Role

This policy is specifically so our execution role can perform S3 operations (as it needs to be able to get the image files from S3 to resize them, and then put the new resized versions back into S3).

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "VisualEditor0",

"Effect": "Allow",

"Action": [

"s3:PutObject",

"s3:GetObject",

"s3:ListBucket",

"s3:DeleteObject"

],

"Resource": [

"arn:aws:s3:::<AWS bucket name 1>/*",

"arn:aws:s3:::<AWS bucket name 1>",

"arn:aws:s3:::<AWS bucket name 2>/*",

"arn:aws:s3:::<AWS bucket name 2>",

"arn:aws:s3:::<AWS bucket name 3>/*",

"arn:aws:s3:::<AWS bucket name 3>"

]

}

]

}

Notes

- For every bucket your Lambda execution role needs permissions to, you need to add two lines in the Resource area. One - with the /* wildcard - gives access to all items in the bucket, the second gives permissions to the root of the bucket.
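As a minimal sketch of that two-lines-per-bucket rule, the Resource list could be generated from a list of bucket names. The helper name s3ResourceArns and the bucket names below are hypothetical and purely for illustration; they are not part of any AWS SDK.

```javascript
"use strict";

// Build the Resource entries for an S3 policy statement: for each bucket,
// one ARN covering the objects inside it and one for the bucket itself.
function s3ResourceArns(bucketNames) {
  return bucketNames.flatMap((name) => [
    `arn:aws:s3:::${name}/*`, // objects in the bucket (get, put, delete)
    `arn:aws:s3:::${name}`, // the bucket itself (listing)
  ]);
}

// Hypothetical bucket names, for illustration only.
const resources = s3ResourceArns(["example-uploads", "example-resized"]);
console.log(JSON.stringify(resources, null, 2));
```

The printed array can be pasted into the Resource field of the policy above.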

Now, we can start looking at the cloud function itself, which will perform the resizing.

Serverless code function for performing image resizing and re-encoding

Before writing the code itself, we first need to understand some critical concepts: performance, image quality, encoding, and image dimensions.

Performance

The speed of your image transcoding function is crucial. The longer it takes, the longer a user has to wait before you can finish processing their images and show their artwork or any other image file (and the more processing costs you will incur).

For best security practices, you also want to avoid showing the user the original image file they uploaded in the submission form. Doing so requires that image to be publicly available to the Internet, even for a brief period, which isn't ideal. Instead, you should resize the image to a small size (e.g. 640x480 or similar) and show the user that, preventing their full-size upload from ever being made publicly visible. As a result, being able to do this quickly is very important.

For best performance, you need to use a highly performant library, which usually means using one that relies on libvips internally. sharp is an excellent option, but there are likely others. You will also need to understand what encoders to use, particularly when working with JPEGs.

Image dimensions

When transcoding artworks proactively (i.e. not lazily, on-demand, as a CDN solution does), you need to make sure you have the image in a few different sizes for displaying efficiently. For an art website, you may have a 4K version of the image for subscribed users, a 1080p version for normally viewing an artwork on its own in a details page, a 480p version for thumbnails in galleries, and perhaps a 240p version as a preview image in notifications areas.

Your image transcoding solution should not do all of these tasks sequentially in one function invocation. Instead, your webserver should call your transcoding function one time for each size it needs to make, and wait for all results to finish. Since these can all be done in parallel, the whole operation only takes as long as the longest transcoding operation (typically the 4K one if it is required). Since the larger transcoding operations take more time, avoid transcoding an image to e.g. 4K if it's not already bigger than 4K - while you can tell sharp or other libraries to not increase an image's size, it's still better to avoid performing any resize in the first place so that you don't unnecessarily make the user wait (and consume Lambda execution time).
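To illustrate skipping sizes the original doesn't exceed, here is a rough sketch of how the list of versions to request could be chosen before invoking anything. The size names, pixel dimensions and the function name sizesNeedingResize are assumptions made up for this example; use whatever sizes your site actually needs.

```javascript
"use strict";

// Hypothetical target sizes: the maximum bounding box for each version.
const TARGET_SIZES = [
  { name: "4k", width: 3840, height: 2160 },
  { name: "1080p", width: 1920, height: 1080 },
  { name: "480p", width: 854, height: 480 },
  { name: "240p", width: 426, height: 240 },
];

// Keep only the targets that would actually shrink the image, i.e. the
// original is larger than the bounding box in at least one dimension.
function sizesNeedingResize(originalWidth, originalHeight, targets = TARGET_SIZES) {
  return targets.filter(
    (t) => originalWidth > t.width || originalHeight > t.height
  );
}

// A 2560x1440 upload fits inside the 4K box, so no 4K resize is requested.
const needed = sizesNeedingResize(2560, 1440);
console.log(needed.map((t) => t.name).join(", ")); // 1080p, 480p, 240p
```

The webserver would then make one invocation per entry in the returned list, rather than always requesting every size.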

Example code

Here's some example code I made to illustrate a basic image resizing function, to run in a Node.js environment.

"use strict";

const sharp = require("sharp"),

S3 = require("aws-sdk/clients/s3");

/*

* Notes: do not enable a VPC for this function. Set the Node.js version to 16.x,

* and architecture to x86_64. It's possible to run this on ARM, but compiling the

* Sharp binaries is a pain and it runs around 60-80% slower (meaning it'll cost more).

*

* Compiling the Sharp binaries for x86_64 is fairly easy - you can create

* a Lightsail Node.js instance ($3.50 server will do), and compile Sharp

* via npm install on that.

*/

// process.env is determined by your Lambda function's environment variables,

// i.e. create an environment variable S3_AWS_REGION with a value corresponding to

// whatever AWS region you are using.

// Regions are in the usual 'eu-west-1' or 'us-east-2' form.

// We don't need credentials here; our execution role has the necessary permissions.

const s3 = new S3({

apiVersion: process.env.S3_API_VERSION,

region: process.env.S3_AWS_REGION

});

exports.handler = async (event) => {

// Example JSON payload:

/*

* {

* "parameters": {

* "imageKey": "something.jpg",

* "imageWidth": 500,

* "imageHeight": 500

* }

* }

*/

const imageKey = event.parameters.imageKey;

// These property names must match the keys in the JSON payload above.

const width = event.parameters.imageWidth;

const height = event.parameters.imageHeight;

var inputBucket = process.env.S3_INPUT_BUCKET;

var outputBucket = process.env.S3_OUTPUT_BUCKET;

// Load the original image from S3

try {

const params = {

Bucket: inputBucket,

Key: imageKey,

};

var originalImage = await s3.getObject(params).promise();

} catch (error) {

console.log("Failed to retrieve original image from S3: " + error.message);

// Return an explicit failure value so the caller can tell this apart from success.

return "error";

}

const imageBody = originalImage.Body;

// Get the metadata for the image, such as width, height, format etc.

let imageMetadata = await sharp(imageBody).metadata();

let outputFormat = "jpg";

let outputFormatMimeType = "image/jpeg";

// hasAlpha is a boolean property of sharp's metadata, not a function.

if (imageMetadata.format === 'png' && imageMetadata.hasAlpha) {

// If the original image has transparency (alpha channel), we want to preserve it.

// Therefore, we will save it as a PNG instead of JPG, as JPG does not support transparency.

outputFormat = "png";

outputFormatMimeType = "image/png";

}

let resizeDimensions = {

width: parseInt(width, 10),

height: parseInt(height, 10),

};

// First, resize the image and put it into a buffer.

let resizedBuffer = await sharp(imageBody)

.resize(

Object.assign(resizeDimensions, {

// Ensures the image is never enlarged if for whatever reason

// your width and height parameters are larger than the image's

// actual size.

withoutEnlargement: true,

// 'Inside' preserves aspect ratio, will resize the image to

// fit within the width and height specified (maximum).

fit: 'inside',

kernel: 'lanczos3',

})

)

.toBuffer();

let bufferData;

// Encode the image into its new format.

if (outputFormat === 'png') {

bufferData = await sharp(resizedBuffer)

.toFormat('png')

.png({

compressionLevel: 9,

palette: true,

})

.toBuffer();

} else {

bufferData = await sharp(resizedBuffer)

.toFormat('jpeg')

.jpeg({

quality: 90,

// 4:4:4 means no colour loss, effectively disabling chroma subsampling.

chromaSubsampling: '4:4:4',

// Comment line below if you do not want MozJPEG encoding.

// MozJPEG runs slightly slower but results in lower filesize for

// the same quality setting.

mozjpeg: true,

})

.toBuffer();

}

// Upload the new image to the destination bucket

try {

await s3.putObject({

Body: bufferData,

Bucket: outputBucket,

ContentType: outputFormatMimeType,

Key: imageKey,

}).promise();

} catch (error) {

console.log("Put object failed: " + error.stack);

// Don't fall through to the "success" return below if the upload failed.

return "error";

}

return "success";

};

Notes about the example code

To use sharp here, we must include it somehow, as it is not available in the Node.js runtime environment by default. Serverless code providers normally have a separate mechanism for making code modules available at execution time, which is explained in the next section.

This particular example function doesn't return the S3 key of the new image, but generally yours should: unless the key always follows a predetermined format, the webserver will not know where the new images are, or whether an error prevented one of them from being created.
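As a sketch of what returning more information could look like, the helpers below build an output key that includes the requested size, and a small result object for the handler to return. The naming scheme, the function names buildOutputKey and buildResult, and the bucket name are all hypothetical; they only show one possible shape.

```javascript
"use strict";

// Hypothetical naming scheme: "<original name>_<width>x<height>.<extension>".
function buildOutputKey(imageKey, width, height, outputFormat) {
  const slash = imageKey.lastIndexOf("/");
  const dot = imageKey.lastIndexOf(".");
  // Only strip an extension that belongs to the file name, not to a folder.
  const baseName = dot > slash + 1 ? imageKey.slice(0, dot) : imageKey;
  const extension = outputFormat === "png" ? "png" : "jpg";
  return `${baseName}_${width}x${height}.${extension}`;
}

// One possible shape for the value the handler returns to the webserver.
function buildResult(ok, details) {
  return ok
    ? { status: "success", bucket: details.bucket, key: details.key }
    : { status: "error", message: details.message };
}

const key = buildOutputKey("something.jpg", 500, 500, "jpg");
console.log(JSON.stringify(buildResult(true, { bucket: "example-output", key })));
```

Including the size in the key also stops the different versions of one image from overwriting each other if they share an output bucket.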

Lambda Layers

In AWS Lambda, the system for importing external code libraries to be available for your function at execution time is known as Layers. You need to create a Layer for the sharp library, so that it can be included with your function at runtime (or else it won't be able to find the sharp library).

This means you need to compile the sharp library for the particular architecture of your serverless code provider. On AWS Lambda, the two architectures are x86_64 and arm64 (ARM). I recommend not using ARM: while it's cheaper, it did not perform well in testing, at least where sharp is concerned.

Compiling Sharp for x86_64 architecture

To use sharp properly, we need to compile it for the x86_64 architecture it will run on. To do this, create a Node.js AWS Lightsail instance (a $3.50 tier server will do the job just fine). Configure PuTTY and WinSCP to connect to it.

Create a folder in your Lightsail instance's home directory, e.g. "compilingsharp" or suchlike, and then run the following commands inside that folder:

npm i sharp

rm package.json

rm package-lock.json

zip -r sharp.zip .

This installs sharp (i is shorthand for install, i.e. npm install sharp), removes the unneeded JSON manifest files, and zips up the current directory, which contains the resulting node_modules folder. Download this zip file from the server via WinSCP, and you have sharp ready to upload as a Lambda layer. Note that the AWS documentation describes Node.js layers as needing their modules under a nodejs/node_modules path inside the zip, so if your function cannot find sharp, run the commands in a folder named nodejs and zip that folder from its parent directory instead. If you created the Lightsail server just for this purpose, you can now shut it down and remove it in the console, as it's no longer needed.

You can now upload the zip to Lambda -> Layers in the same region as your function. Make sure you mark it as compatible with the correct runtimes (Node.js 16.x and 18.x at the time of writing), so that it appears in the list of layers available to functions using those runtimes.

Invoking the Lambda function from your webserver

Utilising your new function efficiently means calling it asynchronously from your webserver. This can be a little confusing, as there are two separate questions: how Lambda itself invokes the function, and whether your webserver's code waits for each call before starting the next.

In AWS Lambda, the two relevant values of the InvocationType parameter are "RequestResponse" and "Event". "RequestResponse" (the default) is a synchronous invocation, like a normal function call: the caller gets a response back once your Lambda function has finished. "Event" is what AWS calls an asynchronous invocation: Lambda queues the request and replies straight away, so your webserver never receives the function's result and can't do anything when the code finishes.

What we need is a "RequestResponse" invocation, sent using the async features of whatever language the webserver is written in. This way, we can invoke our function several times to cover all the transcoding we need to do, so that the invocations execute concurrently, and then wait until all of them have completed.

In PHP this is rather unwieldy-looking, but the pseudocode is like this:

$allPromises = [];

$resultArray = [];

$errorArray = [];

foreach ($newArtworkSizes as $artworkSize) {

$input['parameters']['newArtworkSizes'] = [$artworkSize];

$artworkInput = json_encode($input);

$promise = $lambdaAdminClient->invokeAsync([

'FunctionName' => '<the name of your Lambda function>',

'Payload' => $artworkInput

]);

$promise->then(

function ($value) use (&$resultArray) {

$resultArray[] = $value;

},

function ($reason) use (&$errorArray) {

$errorArray[] = $reason;

}

);

$allPromises[] = $promise;

}

Promise\Utils::all($allPromises)->wait();

Notes

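How the input parameters are formatted depends on your function. invokeAsync is the AWS SDK for PHP's promise-returning form of invoke, so each call starts a request and immediately returns a Promise object. What we are doing here is building an array of those Promise objects, so that all the invocations execute at the same time and we can wait for every one of them to finish before returning the results to the user.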

function ($value) use (&$resultArray)

This odd-looking syntax in the promise's then function is to do with variable scope. The function passed to $promise->then is an anonymous function, which does not normally have access to variables in the main scope; the use clause imports $resultArray into it. The & symbol imports the variable by reference rather than as a copy. Without it, anything the anonymous function adds goes into its own copy, and you end up with an empty result array in the main scope.

By using this approach, it doesn't matter whether we are transcoding 1 image or 5 images, or whether each image has 1 version or 5 versions to transcode (e.g. with 5 images needing 5 resized versions each, you'd have 25 transcoding operations to perform). Since your webserver is making all 25 requests concurrently, the transcoding operations happen at the same time, and the user only has to wait for as long as the longest operation takes to finish.
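For comparison, here is the same wait-for-everything pattern as a small JavaScript sketch. It is not a translation of the PHP above: invokeTranscode is a hypothetical stand-in that only simulates a "RequestResponse" invocation with a timer, and the job fields are made up for the example. One deliberate difference is that it uses Promise.allSettled, so a single failed invocation does not discard the results of the others.

```javascript
"use strict";

// Hypothetical stand-in for a promise-based Lambda invocation. It only
// simulates latency and a success or failure; no AWS call is made.
function invokeTranscode(job) {
  return new Promise((resolve, reject) => {
    setTimeout(() => {
      if (job.fail) {
        reject(new Error(`transcode failed for ${job.imageKey} at ${job.size}`));
      } else {
        resolve({ imageKey: job.imageKey, size: job.size });
      }
    }, job.delayMs);
  });
}

// Start every job at once, then wait for all of them to settle.
async function transcodeAll(jobs, invoke = invokeTranscode) {
  const settled = await Promise.allSettled(jobs.map((job) => invoke(job)));
  const results = [];
  const errors = [];
  for (const outcome of settled) {
    if (outcome.status === "fulfilled") {
      results.push(outcome.value);
    } else {
      errors.push(outcome.reason.message);
    }
  }
  return { results, errors };
}

const jobs = [
  { imageKey: "something.jpg", size: "1080p", delayMs: 30 },
  { imageKey: "something.jpg", size: "480p", delayMs: 10 },
  { imageKey: "something.jpg", size: "240p", delayMs: 5, fail: true },
];

transcodeAll(jobs).then(({ results, errors }) => {
  console.log(`${results.length} succeeded, ${errors.length} failed`);
});
```

Because all three timers run at once, the whole batch takes roughly as long as the slowest job (about 30 ms here) rather than the sum of the three.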